Day 22 - Thoughts on Programming Language design

11 Mar 2017 · 100daysofwriting · language-design · programming

I was discussing Cloudbleed with my friend, Kalyan Kumar. He had this to say about it:

And then … The final problem was that the generated C code didn’t have proper checking for the pointer that breaks the code. Now this kind of stuff are the biggest problems with C.

Secondly, most of the code currently at the core of the system is C. Take anything, finally it will be converted to C, or uses some code that is C.

I don’t understand one thing. People keep blaming C for how it manages most stuff. But when any kind new programming languages, structures that people make, they use C at their heart to work. The new things are simply wrappers around C. If people are going to keep blaming C, they should invent something that can replace C more fundamentally, at their core.

The last message is very interesting. In the past few weeks, and even in the last semester, I have been writing a lot of C. About 3500 lines of C, since the first week of January. About 2000 lines in the previous semester. All this code is pretty heavy with data structures (malloc, free): float matrices, a collection of float matrices in a 3D float array, integer arrays, transforms on the whole table, etc. All of this has to be dynamically allocated on the basis of whatever input the user enters. So, that’s a lot of memory being allocated and freed on the heap.

So, when people blame C for how it manages pointers and memory in general, it’s the SEGFAULTs that they are talking about: a C program trying to access memory that’s not its own, that it never allocated, but that just happens to be lying next to some other memory that it did allocate.

C does pointers well. Working with them in C:

- you have to think a lot about the sizes that are being allocated

- you learn a lot more about design and literally how arrays of higher dimensions work.

- you also happen to learn about how to decide what arguments should be passed by value, passed by reference, which ones should be pointers etc.

That is invaluable. So, we have established that it’s a great language to “LEARN”. A great Academic tool.

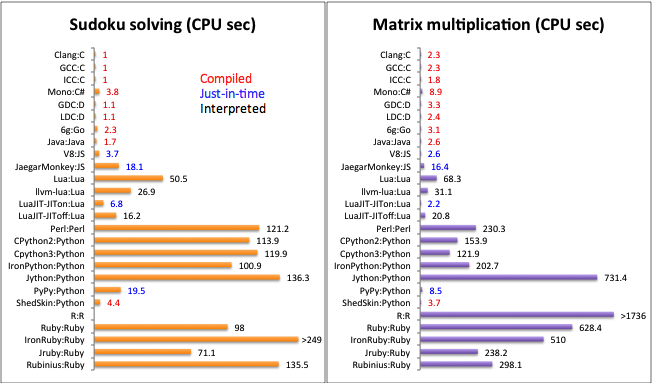

But when you have to use it somewhere in the real world, that’s when things become irritating. I think that languages need to be designed so that the details are abstracted out but we can still hang on to some performance.

An ideal language would have a design where

- It is hard to make mistakes inadvertently

- The tooling around the language enforces this

Java, inside Android Studio, comes pretty close to this ideal. There are caveats.

Java does a good job of this by actively discouraging you from changing anything passed as an argument: everything is passed by value, and for objects the value that gets copied is the reference. Obviously, that isn’t ideal, because then you have to return values and re-assign them. In applications like Android, this is really annoying. The approach the language takes towards types and object-oriented design also makes code very verbose: lots of characters even for the simplest stuff.
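To make the argument-passing behaviour concrete, here is a minimal sketch (the class and method names are made up for illustration). Java copies every argument, and for objects the thing that gets copied is the reference, so reassigning a parameter never reaches the caller, while mutating the object it points to does.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class; none of these names come from real app code.
class ArgumentPassingDemo {

    // Reassigning a parameter only changes the method's local copy.
    static void reassign(int count, List<String> names) {
        count = 99;
        names = new ArrayList<>();
        names.add("lost");
    }

    // Mutating through the copied reference is visible to the caller.
    static void mutate(List<String> names) {
        names.add("added");
    }

    // The usual workaround: return the new value and let the caller re-assign it.
    static int increment(int count) {
        return count + 1;
    }

    public static void main(String[] args) {
        int count = 1;
        List<String> names = new ArrayList<>();

        reassign(count, names);
        System.out.println(count + " " + names); // prints "1 []"

        mutate(names);
        System.out.println(names); // prints "[added]"

        count = increment(count);
        System.out.println(count); // prints "2"
    }
}
```

The last call is the "return values and re-assign" dance: the only way to change the caller's `count` is to hand back a new value.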

Consider this piece of code that calls some functions whenever a timer runs out:

    mTimer.schedule(new TimerTask() {
        @Override
        public void run() {
            // cancel everything here
            mTimer.cancel();
            mTimer.purge();
            Helpers.debug("timeout", "Time up for this question! MOVE ON!");
        }
    }, ((long) (mAllottedTime * 1000)));

If the operation inside run() had to be done on the main thread, it would take some more lines of code, where you would call runOnUiThread() and pass it a Runnable with some initialisation, and so on. Incredibly verbose. And all we are trying to do is schedule some task. (An example of this would be having to touch a TextView or any UI element and change one of its fields: mMessageForUser.setText("YOUR TIME IS UP!"))
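The snippet above depends on Android, so here is a rough plain-JVM sketch of the same shape, just to show how the nesting grows once the work has to hop to another thread. The single-thread executor is only a stand-in for the main thread that runOnUiThread() would target, and TimeoutDemo, runTimeout and the local messageForUser are names invented for this example, not code from the app.

```java
import java.util.Timer;
import java.util.TimerTask;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Plain-JVM stand-in for the Android case; every name here is hypothetical.
class TimeoutDemo {

    // Schedules a timeout and delivers the message on a separate "UI" thread.
    static String runTimeout(long delayMillis) {
        final Timer timer = new Timer();
        // Assumption: a single-thread executor plays the role of the main thread.
        final ExecutorService uiThread = Executors.newSingleThreadExecutor();
        final StringBuilder messageForUser = new StringBuilder();
        final CountDownLatch done = new CountDownLatch(1);

        timer.schedule(new TimerTask() {
            @Override
            public void run() {
                // cancel everything here
                timer.cancel();
                timer.purge();
                // Second layer of nesting: hop over to the "UI" thread.
                uiThread.execute(new Runnable() {
                    @Override
                    public void run() {
                        messageForUser.append("YOUR TIME IS UP!");
                        done.countDown();
                    }
                });
            }
        }, delayMillis);

        boolean finished = false;
        try {
            // Wait for the "UI" update so the caller can read the result.
            finished = done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        timer.cancel();      // harmless if the task already cancelled it
        uiThread.shutdown(); // let the stand-in thread exit
        return finished ? messageForUser.toString() : "";
    }

    public static void main(String[] args) {
        System.out.println(runTimeout(50));
    }
}
```

Two anonymous classes, two @Override annotations and a latch, all to set one string after a delay.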

Coming back to the point about making it hard for people to make mistakes, Java tries to avoid inadvertent exceptions by forcing people to wrap blocks that might throw checked exceptions in try-catch (or to declare them with throws), so that those exceptions never go unhandled. This prevents a whole class of crashes, although unchecked exceptions such as NullPointerException can still slip through. Java also forces everything to be strongly, statically typed. That’s a pain every single time because APIs change, and when they do you have to re-think a lot of the downstream code, or you should have had the insight to design it in an extensible manner in the beginning itself. In either case, it’s time spent improving old code that was working perfectly well, when you could have spent that time writing new code.
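A caveat worth sketching: the compiler only forces a try-catch (or a throws clause) for checked exceptions. Unchecked ones, such as NullPointerException, need no handler and can still bring a program down. The example below is a minimal illustration with invented method names, not code from any real app.

```java
import java.io.IOException;

// Hypothetical demo; the method names are invented for illustration.
class ExceptionDemo {

    // Checked: callers must catch IOException or declare it themselves.
    static String readConfig(boolean fail) throws IOException {
        if (fail) {
            throw new IOException("config missing");
        }
        return "ok";
    }

    // Unchecked: nothing in the signature warns about a NullPointerException.
    static int lengthOf(String text) {
        return text.length();
    }

    // The compiler insists on this try-catch because readConfig declares IOException.
    static String safeRead(boolean fail) {
        try {
            return readConfig(fail);
        } catch (IOException e) {
            return "fallback";
        }
    }

    public static void main(String[] args) {
        System.out.println(safeRead(true)); // prints "fallback"
        try {
            lengthOf(null); // compiles without any handler
        } catch (NullPointerException e) {
            System.out.println("unchecked exception slipped through");
        }
    }
}
```

Removing the try-catch in `safeRead` is a compile error, while the call to `lengthOf(null)` compiles happily with or without a handler.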

Another thing that’s probably useful when dealing with things like Android applications, where the user might notice interruptions: all interruptions are handled seamlessly. The user doesn’t need to know that an app malfunctioned.

App crashed? Just restart the application and show them the first screen. People understand; you need not go out of your way to show them that the app was not designed properly, or that some obscure case slipped through the test nets and crashed the app.

TL;DR When writing code in IDEAL, it’s incredibly hard for programmers to make mistakes that could lead to crashes or catastrophic stuff like SEGFAULTS.

In IDEAL, it will be painfully obvious which arguments are passed by reference and which are simply passed by value. Memory leaks would be avoided in IDEAL by having a good garbage collector, which would keep RAM usage to a minimum. The GC part is definitely harder than it sounds, and part of the responsibility definitely lies with the developer.

IDEAL is a hypothetical language that doesn’t exist yet. (Or maybe it does, and I am too daft to have tried it out yet.)

P.S. Kotlin sounds like Java minus all the stuff that makes it so verbose. I haven’t tried it, but I would like to. It looks good!

POST #21 is OVER